Tune up your Leaf/Spine network:

- Better fabric performance through ECMP tuning

- Non-disruptive failover

- Hitless upgrades

- Automated provisioning

- Stretching VLANs & multi-tenant security

- Simple IP Mobility

- Decrease optics spend

- Eliminate licenses for basic features

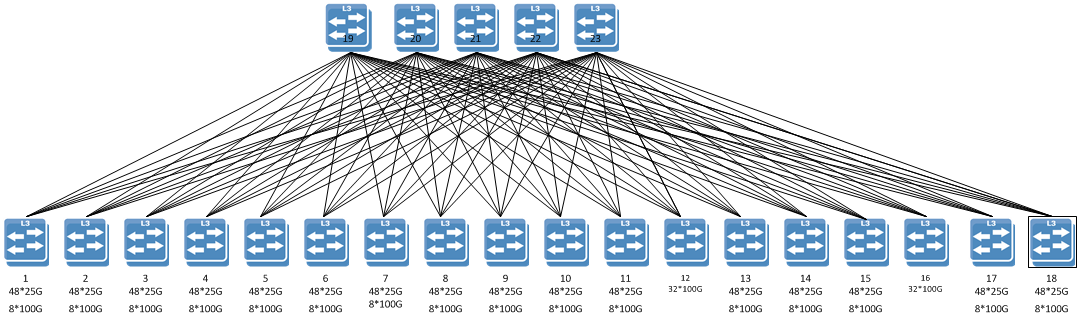

The way modern leaf/spine topologies work is that all switches operate at Layer 3 and use a routing protocol, like BGP or OSPF, to advertise the reachability of their local networks. All traffic from a leaf gets load balanced across a number of spine switches.

The key technology that enables leaf/spine topologies is Equal Cost Multi Pathing (ECMP), which the leaf switches use to send traffic evenly across multiple spine switches. ECMP is a standard, non-proprietary, feature available on all modern switches.

However, not all switches are created equal, so here are some ways to tune up your leaf/spine network:


Tune-up #1: Better Fabric Performance through ECMP Tuning

When a switch load balances packets across multiple paths, it does not simply round robin each packet out a different link, because that would allow packets to arrive out of order, and reordering hurts most hosts: TCP, for example, treats out-of-order arrivals as a sign of loss and triggers retransmissions. So, instead of round-robin forwarding, switches distribute packets across equal paths in a way that forces all packets from a given flow to always take the same path through the network.

This deterministic forwarding is accomplished by making the path selection using packet fields that stay consistent for the entire session. For example, a switch could just hash on the source IP address and then all the packets from a given server would always take the same path.

Every data center is a bit unique, with its own traffic patterns, address schemes, and applications, so if traffic isn’t load balanced well, the ECMP parameters used for load balancing may need to change. It is considered a best practice to hash on as many tuples (or header fields) as your switches allow.

Solution: better hash keys
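As a rough illustration of hash-based path selection, the Python sketch below picks an uplink from a configurable set of header fields. The field names, the `pick_path` helper, and the use of CRC32 are assumptions made for this example; a real switch computes its hash inside the ASIC with its own function and field set.

```python
import zlib

# Hypothetical field names for the classic 5-tuple.
FIVE_TUPLE = ("src_ip", "dst_ip", "proto", "src_port", "dst_port")

def pick_path(flow, num_paths, fields=FIVE_TUPLE):
    """Return the index of the equal-cost path chosen for this flow."""
    key = "|".join(str(flow[f]) for f in fields).encode()
    return zlib.crc32(key) % num_paths

flow_a = {"src_ip": "10.0.1.5", "dst_ip": "10.0.9.7", "proto": 6,
          "src_port": 40001, "dst_port": 443}
flow_b = dict(flow_a, src_port=40002)  # a second session from the same server

# Every packet of flow_a produces the same key, so it never changes path.
stable = pick_path(flow_a, 4) == pick_path(flow_a, 4)

# Hashing on source IP alone pins both sessions to one path, because the
# key is identical; the 5-tuple hash is free to place them on different paths.
pinned = pick_path(flow_a, 4, ("src_ip",)) == pick_path(flow_b, 4, ("src_ip",))
```

Adding fields to `fields` gives the hash more entropy to work with, which is the intuition behind using as many tuples as the hardware allows.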

With larger networks, you should use different ECMP parameters on the spine than on the leaf or super-spine switches. Using the same ECMP hash keys at multiple tiers can lead to polarized traffic flows (which is a bad thing), where all traffic gets sent on a single path, leaving the remaining paths unused. For example, you may want to hash on source and destination IP (SIP+DIP) at the leaf, and on SIP+DIP plus destination port (SIP+DIP+DPORT) on the spine switches.

Solution: use different ECMP keys in Spine than what is used for the Leaf switches
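Polarization is easy to reproduce in a small simulation. The sketch below is only a model: SHA-256 stands in for the ASIC hash, and the addresses, the `ecmp_index` helper, and the four-path fan-out are invented for illustration. It takes the flows a leaf hashed onto one spine and hashes them again at that spine, first with the same keys and then with different ones.

```python
import hashlib
from collections import Counter

def ecmp_index(flow, fields, num_paths):
    """Software stand-in for an ASIC hash: pick a path from the given fields."""
    key = "|".join(str(flow[f]) for f in fields).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_paths

LEAF_KEYS = ("sip", "dip")
SPINE_KEYS = ("sip", "dip", "dport")
NUM_PATHS = 4

# 2000 made-up flows from distinct source addresses.
flows = [{"sip": f"10.0.{i // 250}.{i % 250 + 1}", "dip": "10.1.0.10",
          "dport": 1024 + i} for i in range(2000)]

# Flows that the leaf hashed onto spine 0.
at_spine0 = [f for f in flows if ecmp_index(f, LEAF_KEYS, NUM_PATHS) == 0]

# Same keys at the next tier: every flow lands on the same index again.
polarized = Counter(ecmp_index(f, LEAF_KEYS, NUM_PATHS) for f in at_spine0)
# Different keys at the next tier: the flows spread back out.
spread = Counter(ecmp_index(f, SPINE_KEYS, NUM_PATHS) for f in at_spine0)

print("same keys at both tiers:", dict(polarized))
print("different keys on spine:", dict(spread))
```

With identical keys, the spine repeats the decision the leaf already made, so only one of its four paths carries traffic.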

Trouble with old switches:

To get line rate performance, ECMP forwarding decisions are executed in hardware, inside the switch ASIC. Some switches do this very well, but older switches only work really well if the number of paths is a power of 2. Meaning, if there are 2 or 4 or 8 paths, then they get good distribution, but for 3 or 5 paths, they get really bad distribution where one path gets overloaded while others go unused.

The root cause is too few ECMP hash buckets in the switch ASIC. A switch ASIC with thousands of hash buckets gets great traffic distribution, regardless of the number of links.
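A little arithmetic shows why the bucket count matters. The sketch below assumes buckets are handed out to paths round-robin, which is a simplification and not a description of any particular ASIC; `path_shares` is a hypothetical helper. It reproduces the imbalance, not the worst case where a path goes entirely unused.

```python
def path_shares(num_buckets, num_paths):
    """Fraction of hash buckets (and so, roughly, of flows) each path receives."""
    counts = [0] * num_paths
    for bucket in range(num_buckets):
        counts[bucket % num_paths] += 1  # assumed round-robin bucket fill
    return [c / num_buckets for c in counts]

for buckets in (8, 4096):
    for paths in (3, 4):
        shares = path_shares(buckets, paths)
        print(buckets, "buckets,", paths, "paths:", [f"{s:.2%}" for s in shares])
```

With 8 buckets, 4 paths split evenly at 25% each, but 3 paths split 37.5% / 37.5% / 25%. With 4096 buckets, 3 paths differ by a single bucket, which is a spread of about 0.02%.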

Tune-up #2: Non-Disruptive Failover

When a path fails in traditional ECMP, all flows in the network can get disrupted. The reason for this is that when a path fails – all the flows in the ECMP group get redistributed across the remaining paths – even flows on the non-failed links are rebalanced and get disrupted. This rebalancing of flows leads to out of order packets and retransmissions which momentarily disrupts services across the entire IP fabric. Then, when the path is restored, this disruption gets repeated as all the flows get rebalanced again, further upsetting the applications flowing over this infrastructure.

Resilient Hashing solves this problem by rebalancing only those flows that were assigned to the path that failed. The flows on the unaffected paths are left undisturbed.

Solution: Resilient Hashing
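The difference between the two behaviours can be sketched in a few lines of Python. This is a simplified model, not a vendor implementation: the 64-entry bucket table, the spine names, and all function names are assumptions, and SHA-256 stands in for the hardware hash.

```python
import hashlib

def flow_hash(flow_id):
    digest = hashlib.sha256(str(flow_id).encode()).digest()
    return int.from_bytes(digest[:4], "big")

def traditional_path(flow_id, paths):
    # Traditional ECMP modelled as: hash modulo the current number of paths.
    return paths[flow_hash(flow_id) % len(paths)]

def build_table(paths, num_buckets=64):
    # Resilient hashing modelled as: a fixed-size bucket table.
    return [paths[b % len(paths)] for b in range(num_buckets)]

def fail_path(table, failed, survivors):
    """Rewrite only the buckets that pointed at the failed path."""
    new_table = list(table)
    refill = 0
    for bucket, path in enumerate(table):
        if path == failed:
            new_table[bucket] = survivors[refill % len(survivors)]
            refill += 1
    return new_table

def resilient_path(flow_id, table):
    return table[flow_hash(flow_id) % len(table)]

paths = ["spine1", "spine2", "spine3", "spine4"]
survivors = [p for p in paths if p != "spine3"]
flows = range(1000)

table = build_table(paths)
table_after = fail_path(table, "spine3", survivors)

def moved_unnecessarily(before, after):
    """Count flows that were NOT on the failed path but changed path anyway."""
    return sum(1 for f in flows
               if before(f) != "spine3" and before(f) != after(f))

traditional_moved = moved_unnecessarily(
    lambda f: traditional_path(f, paths),
    lambda f: traditional_path(f, survivors))
resilient_moved = moved_unnecessarily(
    lambda f: resilient_path(f, table),
    lambda f: resilient_path(f, table_after))

print("healthy flows disturbed, traditional:", traditional_moved)
print("healthy flows disturbed, resilient:  ", resilient_moved)
```

In this model the resilient table never touches a bucket that pointed at a healthy spine, so the second count is zero by construction.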

What if your switches don’t support resilient hashing?

Some folks will use layer 2 Link Aggregation (or LAG) on a pair of links between each leaf & spine in an attempt to minimize this problem. This workaround allows a single link to fail and no traffic will be rebalanced. The downside is that no traffic will be rebalanced: the degraded LAG still receives its full share of flows over half the bandwidth, so you will get less than ideal traffic distribution. It also provides no benefit if a spine switch goes offline.

Tune-up #3: Hitless upgrades

In the old days of networking, updating a big modular switch meant taking down half the network. This required all upgrades to be scheduled around specific maintenance windows. Now, the first time you update a big switch, you might trust a vendor’s In Service Software Upgrade (ISSU) feature, at least until you discover it frequently won’t work for “major” software upgrades, which commonly include SDK upgrades, changes in the ISSU functionality itself, or an FPGA/CPLD firmware re-flash. With the leaf/spine approach, updating a switch becomes a trivial event:

Step 1: change the route cost on the switch and watch flows move away from it until all traffic has been redirected to other switches

Step 2: upgrade the firmware & restore the route cost
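The two steps above can be sketched as a small orchestration routine. Everything device-facing here is hypothetical: `FakeSwitch` simulates a switch so the example runs on its own, and its methods do not correspond to any real vendor API. In practice the same drain, upgrade, restore sequence would be driven through your automation tool of choice, with a delay between polls.

```python
class FakeSwitch:
    """Stand-in for a real device API; every method here is hypothetical."""

    def __init__(self, name, route_cost=10, active_flows=3):
        self.name = name
        self.route_cost = route_cost
        self.active_flows = active_flows
        self.firmware = "1.0"
        self.log = []

    def set_route_cost(self, cost):
        self.route_cost = cost
        self.log.append(f"cost={cost}")

    def poll_active_flows(self):
        # Simulation only: one flow moves away each time we poll.
        if self.active_flows > 0:
            self.active_flows -= 1
        return self.active_flows

    def upgrade_firmware(self, version):
        if self.active_flows:
            raise RuntimeError("switch still carries traffic")
        self.firmware = version
        self.log.append(f"firmware={version}")


def hitless_upgrade(switch, version, drained_cost=65535, max_polls=100):
    original_cost = switch.route_cost
    # Step 1: raise the route cost and wait for traffic to move away.
    switch.set_route_cost(drained_cost)
    try:
        for _ in range(max_polls):
            if switch.poll_active_flows() == 0:
                break
        else:
            raise TimeoutError(f"{switch.name} did not drain")
        # Step 2: upgrade the firmware...
        switch.upgrade_firmware(version)
    finally:
        # ...and restore the route cost, even if something went wrong.
        switch.set_route_cost(original_cost)


spine = FakeSwitch("spine1")
hitless_upgrade(spine, "2.0")
print(spine.log)
```

Restoring the cost in a `finally` block is a design choice for the sketch: a failed upgrade should not leave the switch permanently drained without anyone noticing.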

This upgrade process can easily be automated. In fact it should be automated, as another data center best practice is to automate anything that needs to be done more than once. Which brings us to the next topic:

Tune-up #4: Automated Network Provisioning

There is a long held axiom that for every $1 spent on CAPEX, another $3 gets spent on OPEX. The key to lowering that OPEX to CAPEX ratio is through automation.

Automation is needed for any large network and even more so with leaf/spine topologies. Server automation tools like Puppet, Chef, and Ansible are now being applied to networks. Ansible, in particular, has gained in popularity and is a great tool for enabling Zero Touch Provisioning (ZTP) for your network. With ZTP, you can RMA a switch and the replacement switch is automatically provisioned with the correct IP addresses, configuration, and routing profile. We provide sample Ansible scripts to make it easy for folks to start automating their networks.

One way to make automation really easy is to use a feature called IP unnumbered, which, besides conserving IP addresses, makes the configuration very simple. In some cases, the only config difference from one switch to the next is the loopback address. Another automation trick is to reuse the same pair of ASNs on all your ToRs to further simplify the switch configs.
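To make the "only the loopback differs" point concrete, here is a minimal templating sketch. The config syntax inside `TEMPLATE` is illustrative pseudo-config, not the CLI of any real network operating system, and the interface names, addresses, and ASN are made up.

```python
# Illustrative pseudo-config only; not a real vendor or NOS syntax.
TEMPLATE = """\
interface lo
  address {loopback}/32
interface uplink1
  ip unnumbered lo
interface uplink2
  ip unnumbered lo
router bgp {asn}
  router-id {loopback}
"""

def render(loopback, asn=65001):
    """Render a ToR config; the loopback is the only per-switch input."""
    return TEMPLATE.format(loopback=loopback, asn=asn)

tor1 = render("10.255.0.1")
tor2 = render("10.255.0.2")

# Compare the two configs line by line and keep only the lines that differ.
differing = [(a, b) for a, b in zip(tor1.splitlines(), tor2.splitlines())
             if a != b]
for old, new in differing:
    print(old.strip(), "->", new.strip())
```

Because the uplinks borrow the loopback address and every ToR shares the same ASN, the per-switch inventory shrinks to a single value, which is what makes ZTP-style provisioning so simple.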

Automation pays additional dividends by making your network more reliable. The majority of data center outages can be attributed to a lack of automation, either because of a simple fat-finger mistake where someone misconfigured a switch, or because a security policy was not followed. When everything is automated, there are no fat-finger mistakes and security policies get implemented every time a switch is configured.

Tune-up #5: Stretching VLANs between racks and multitenancy

With most leaf/spine designs, each rack has a unique subnet or broadcast domain – which is a good thing since that limits how far trouble can spread. However, it also keeps VMs from being able to live migrate from one rack to another because their VLAN is effectively trapped to a single rack. The usual solution for this has been to stretch the VLAN across Layer 3 boundaries using an overlay technology like VXLAN and, historically, this was done with Software VTEPs on the servers. These Software VTEPs were orchestrated by a centralized controller that would corral all the VTEPs and share the MAC reachability between the servers.

But now there is a controller-free VXLAN solution, called EVPN, which is great for putting a VLAN anywhere in the data center, or even stretching VLANs between data centers in some cases. One of the key benefits of using EVPN is that the VXLAN tunnels terminate on the switches, which makes it easy to deploy with bare-metal servers.

Tune-up #6: Simple IP Mobility without Overlays

A major trend in larger data centers is to use Routing on the Host (RoH) to provide IP Mobility, allowing containers to move anywhere in the data center. RoH is particularly useful for Software as a Service (SaaS) data centers because, in many cases, there is just one “tenant” on the network. As such, they don’t need VXLAN to carve up their network, but they do need to move containers anywhere in the data center. RoH solves this problem by running a routing protocol on the server itself. Every container gets a unique /32 IP address, which the host server advertises to the rest of the network using a dynamic routing protocol.
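The mechanics of a /32 following its container can be modelled with a toy routing table. This sketch is not a routing protocol: the `Fabric` class, the server names, and the addresses are invented, and "advertise" and "withdraw" simply stand in for what a dynamic routing protocol on the host would do.

```python
import ipaddress

class Fabric:
    """Toy routing table with longest-prefix-match lookup."""

    def __init__(self):
        self.routes = {}

    def advertise(self, prefix, next_hop):
        self.routes[ipaddress.ip_network(prefix)] = next_hop

    def withdraw(self, prefix):
        self.routes.pop(ipaddress.ip_network(prefix), None)

    def lookup(self, address):
        ip = ipaddress.ip_address(address)
        matches = [net for net in self.routes if ip in net]
        if not matches:
            return None
        # The most specific route wins, so a /32 beats any covering prefix.
        best = max(matches, key=lambda net: net.prefixlen)
        return self.routes[best]

fabric = Fabric()
fabric.advertise("0.0.0.0/0", "border")

# server-a hosts the container and advertises its /32.
fabric.advertise("10.1.0.50/32", "server-a")
before_move = fabric.lookup("10.1.0.50")

# The container moves: server-a withdraws the route, server-b advertises it.
fabric.withdraw("10.1.0.50/32")
fabric.advertise("10.1.0.50/32", "server-b")
after_move = fabric.lookup("10.1.0.50")

print(before_move, "->", after_move)
```

The container keeps its IP address across the move; only the next hop for its /32 changes, so no overlay or NAT is involved.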

This is a very simple approach that eliminates the need for controllers and complex NAT schemes. It further simplifies the network as it also eliminates the need for a slew of protocols like Spanning Tree, Loop-guard, Root guard, BPDU Guard, Uplink-Fast, Assured Forwarding, GVRP, or VTP. Your data center becomes almost a one-protocol environment, which minimizes configuration and troubleshooting efforts.

With RoH, the servers also support ECMP, so there is no need for LACP/Bonding on the servers or proprietary features like vPC or MLAG on the switches. This also frees up the MLAG ISL ports on the Top of Rack switches to use for forwarding traffic. Another benefit is that you are no longer limited to 2 ToR switches per rack, and could dual/triple/quadruple-home your servers.

However, as simple as it sounds, RoH is usually reserved for more modern DevOps environments where there is no division between the server and network teams.

Tune-up #7: Reduce your spend on Optics

Don’t believe people telling you that connecting servers to an End of Row chassis switch will reduce costs because it eliminates the need for a ToR. Just add up the optics and cabling costs and you’ll be very happy with the price of the ToR, not to mention your DC design will be nice and clean. Other things you can do to lower your optics spend are:

- Avoid buying optics from a network vendor that doesn’t actually make optics. Most network vendors just relabel generic optics and then charge a huge markup while providing no additional value

- Use Active Optical Cables (AOCs) instead of transceivers. This is a cost-saving tool used in the largest supercomputers in the world. AOCs are basically fiber cables with the transceivers permanently attached which reduces costs.

- In large topologies, place the spine and super-spine close together so you can use inexpensive copper DAC cables for those connections

Tune-up #8: Eliminate Licenses for basic features

Some network vendors use bait-and-switch tactics where they quote you a great price for the switch hardware, win your business, and then later explain that you need to pay extra for basic features like BGP or ZTP. Nobody pays extra to PXE boot their server, so why would you pay extra to network boot a switch?

Reduce what you pay for “table stakes” features like VXLAN, BGP, EVPN, ZTP, Monitoring, and Mirroring by using one of two options:

Option 1: The best way to reduce those license costs is to use a network vendor that doesn’t play those tricks

Option 2: Introduce a 2nd vendor into your network and split your IT spend. Those licenses have zero actual cost, so use your second vendor as leverage to get the “bad” vendor to throw the licenses in for free. Or, you know, you could just increase your spend with the “good” vendor (see option 1).

You shouldn’t have to pay exorbitant license fees for basic features like ZTP, BGP, or VXLAN. These aren’t exotic features. They are basic features that are expected in modern data center class switches. These licenses are like car-salesman tricks, trying to get you to pay extra for “undercoating” and “headlight fluid”.